AI Compliance Assistant

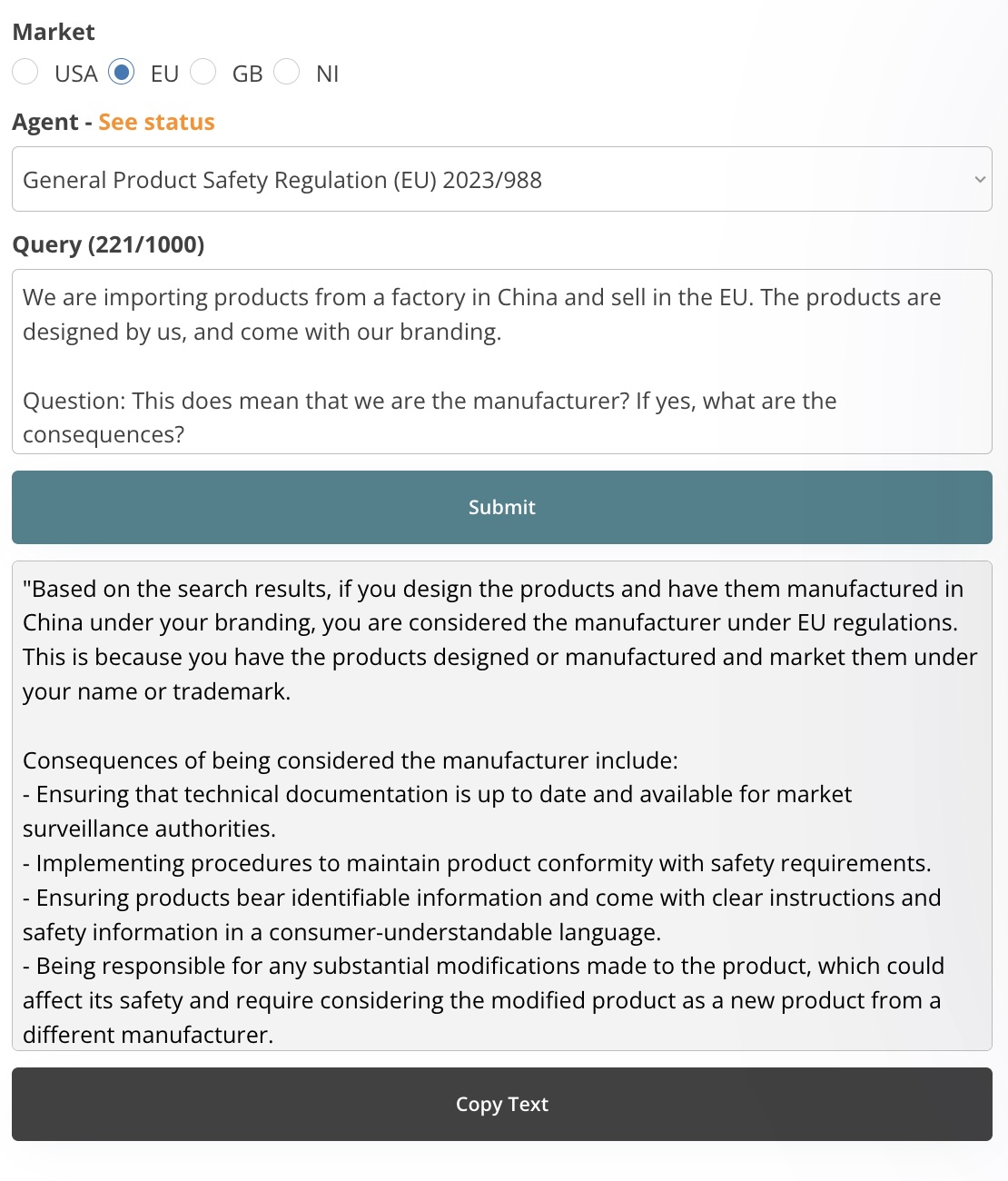

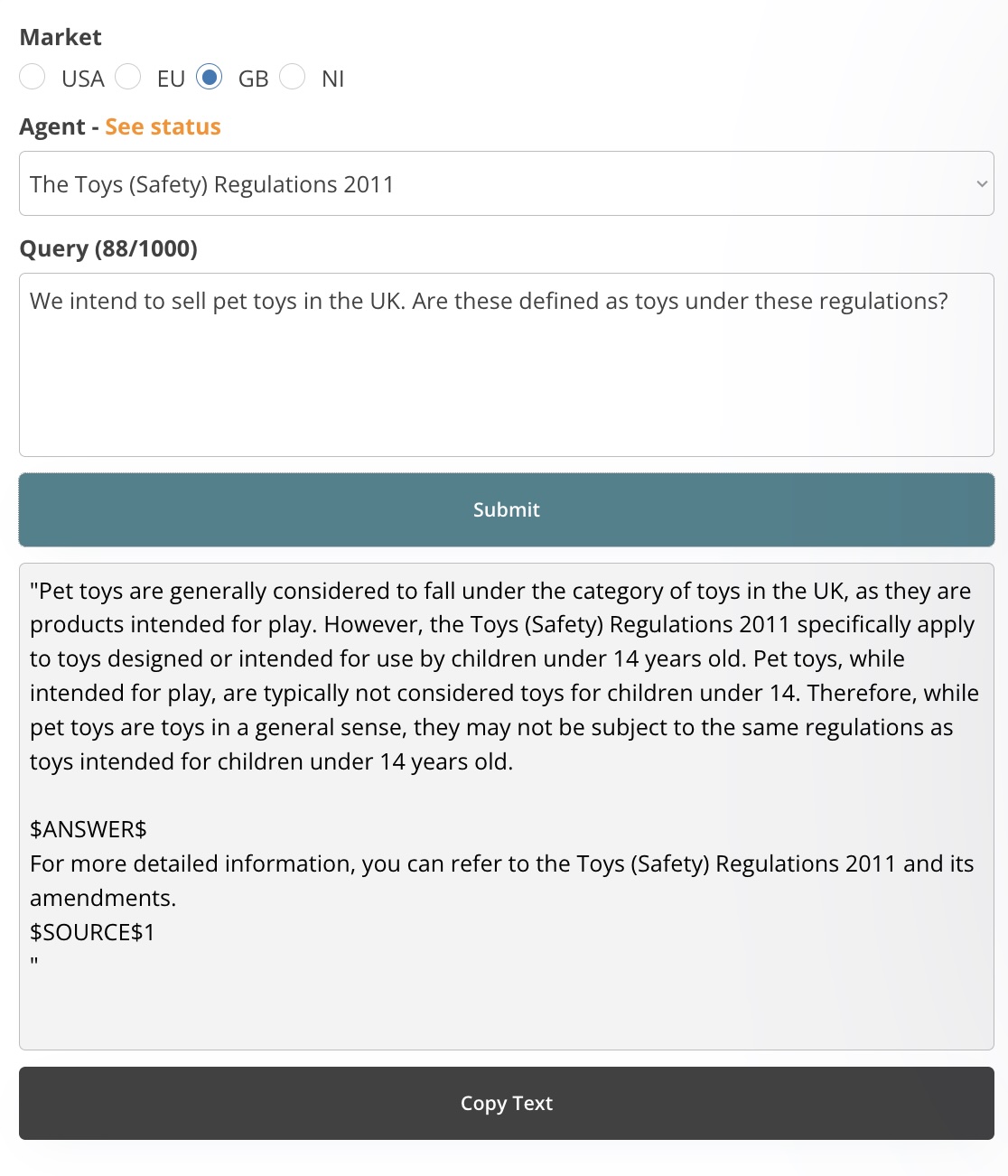

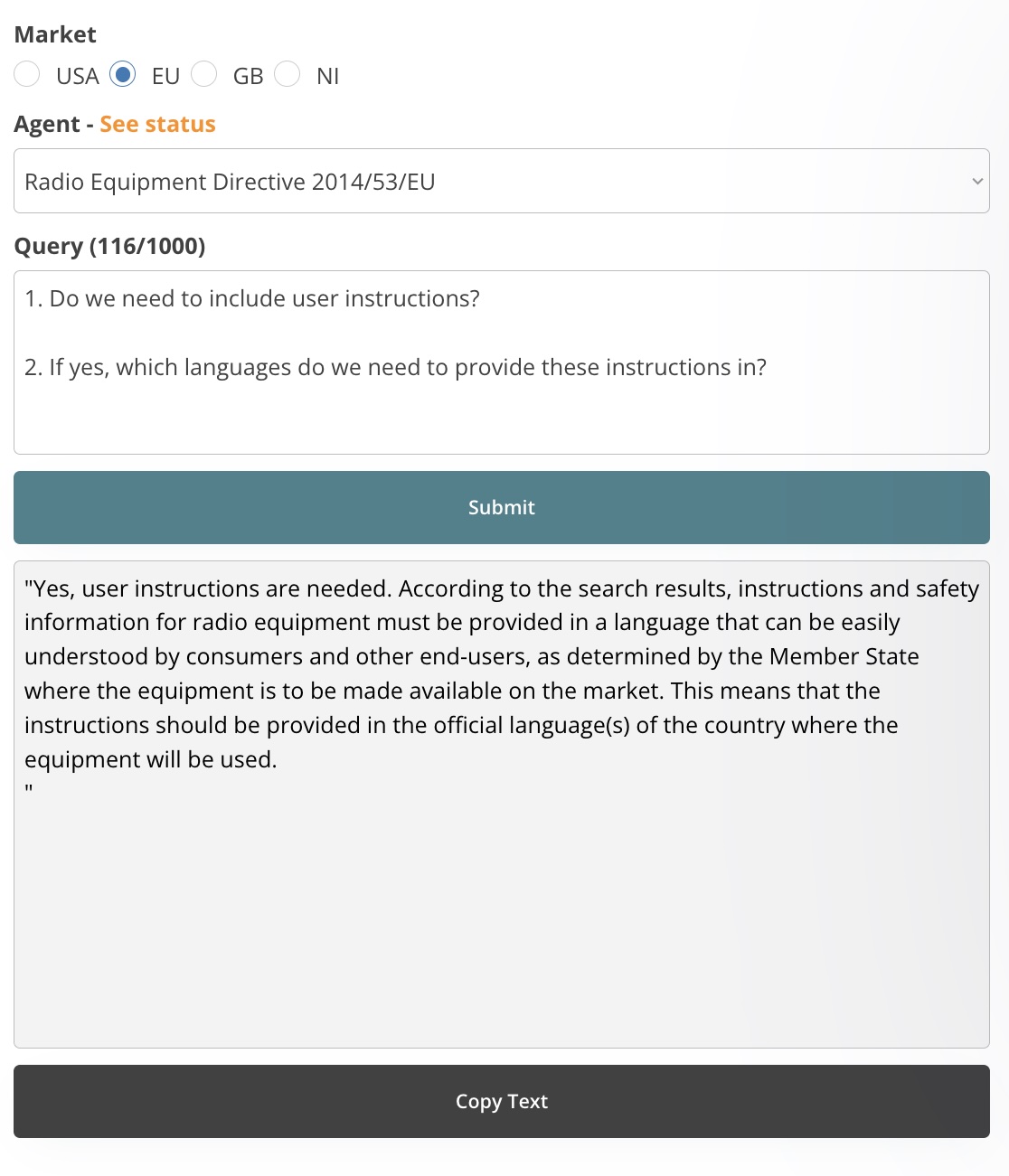

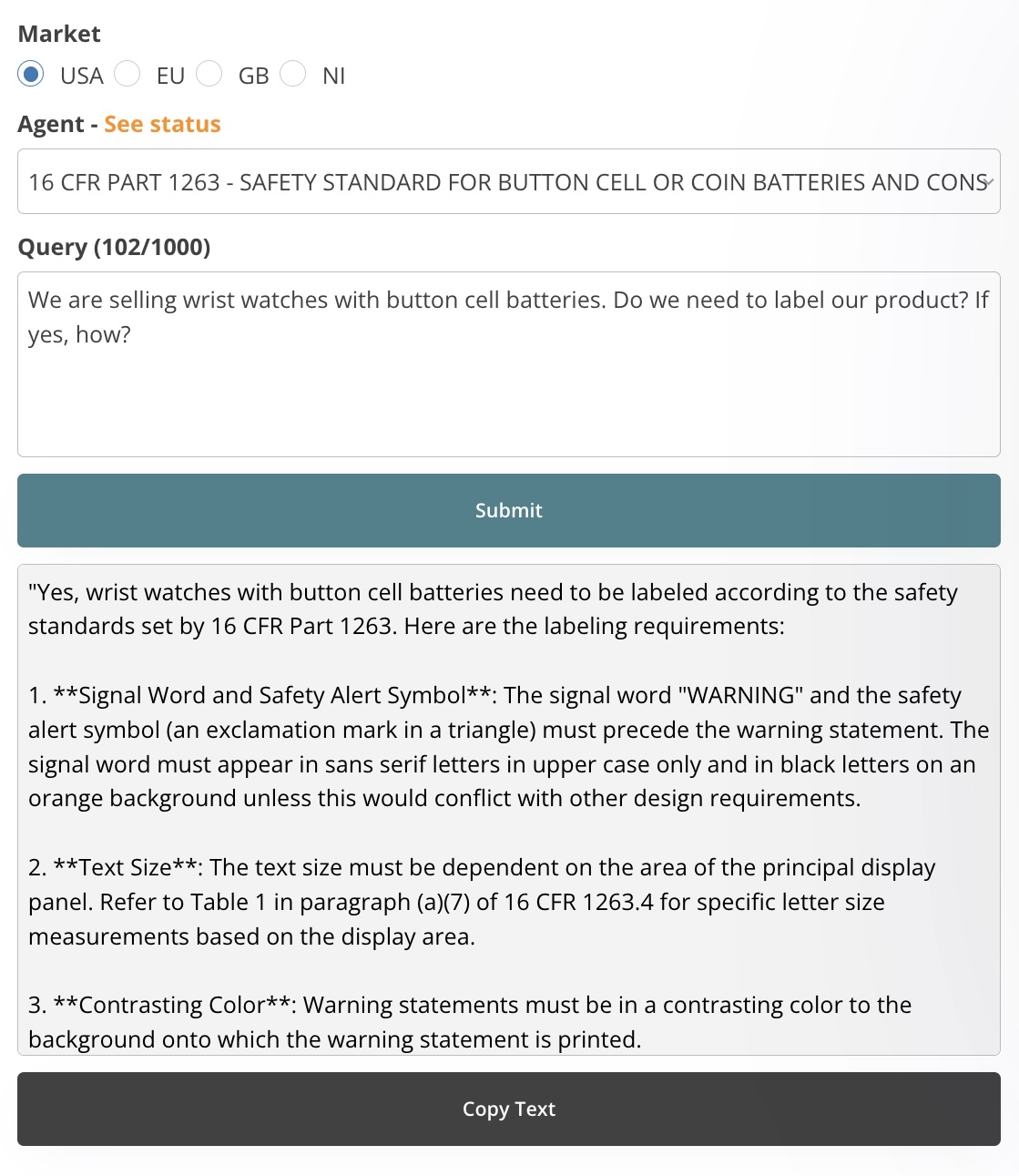

The AI Compliance Assistant allows you to ask questions and receive answers based on what’s written in the actual product regulations. Think of it as having a conversation with the regulation text itself.

Answers based on a knowledge base

Instant replies and 24/7 availability

Ask as many questions as you want

Covered countries/markets

Which regulations are covered?

You can find a list of regulations for which we have built an AI agent in the Monthly Review Methodology documents below.

What is an AI agent?

The Compliance Assistant is a collection of multiple specialised AI agents. An AI agent is a Large Language Model (LLM) interface connected to a knowledge base. The knowledge base contains regulation texts, guidance documents, and other relevant documentation.

The AI agent searches the knowledge base for information relevant to your question and uses it to generate a response grounded in those sources.
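
The search-then-generate flow described above can be sketched in a few lines of Python. This is only an illustration, not how the Compliance Assistant is implemented: the knowledge base entries are paraphrased placeholders rather than actual regulation text, the word-overlap ranking stands in for whatever search method the product uses, and the names `KNOWLEDGE_BASE`, `search`, and `build_prompt` are hypothetical. The sketch stops at building the prompt and does not call an LLM.

```python
import re

# Hypothetical placeholder passages; NOT actual regulation text.
KNOWLEDGE_BASE = [
    {"source": "Document A",
     "text": "Toys must carry a warning label when intended for children under three years."},
    {"source": "Document B",
     "text": "Electrical equipment must not contain restricted substances above the permitted limit."},
    {"source": "Document C",
     "text": "The manufacturer draws up a declaration of conformity before placing the product on the market."},
]

def tokenize(text):
    """Lowercase the text and return the set of words it contains."""
    return set(re.findall(r"[a-z]+", text.lower()))

def search(question, knowledge_base, top_k=1):
    """Rank passages by the number of words they share with the question.

    Assumption: simple word overlap is used here only to keep the
    example self-contained; a real system would use a stronger method.
    """
    question_words = tokenize(question)
    scored = [(len(question_words & tokenize(p["text"])), p) for p in knowledge_base]
    scored = [pair for pair in scored if pair[0] > 0]  # drop passages with no overlap
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [passage for _, passage in scored[:top_k]]

def build_prompt(question, passages):
    """Combine the retrieved passages and the question into one prompt."""
    context = "\n".join(f"[{p['source']}] {p['text']}" for p in passages)
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )

question = "Which restricted substances apply to electrical equipment?"
passages = search(question, KNOWLEDGE_BASE)
prompt = build_prompt(question, passages)
print(prompt)
```

The prompt that reaches the language model contains the retrieved passage alongside the question, which is why the response normally reflects the source text rather than general training data.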

How is this different from ChatGPT?

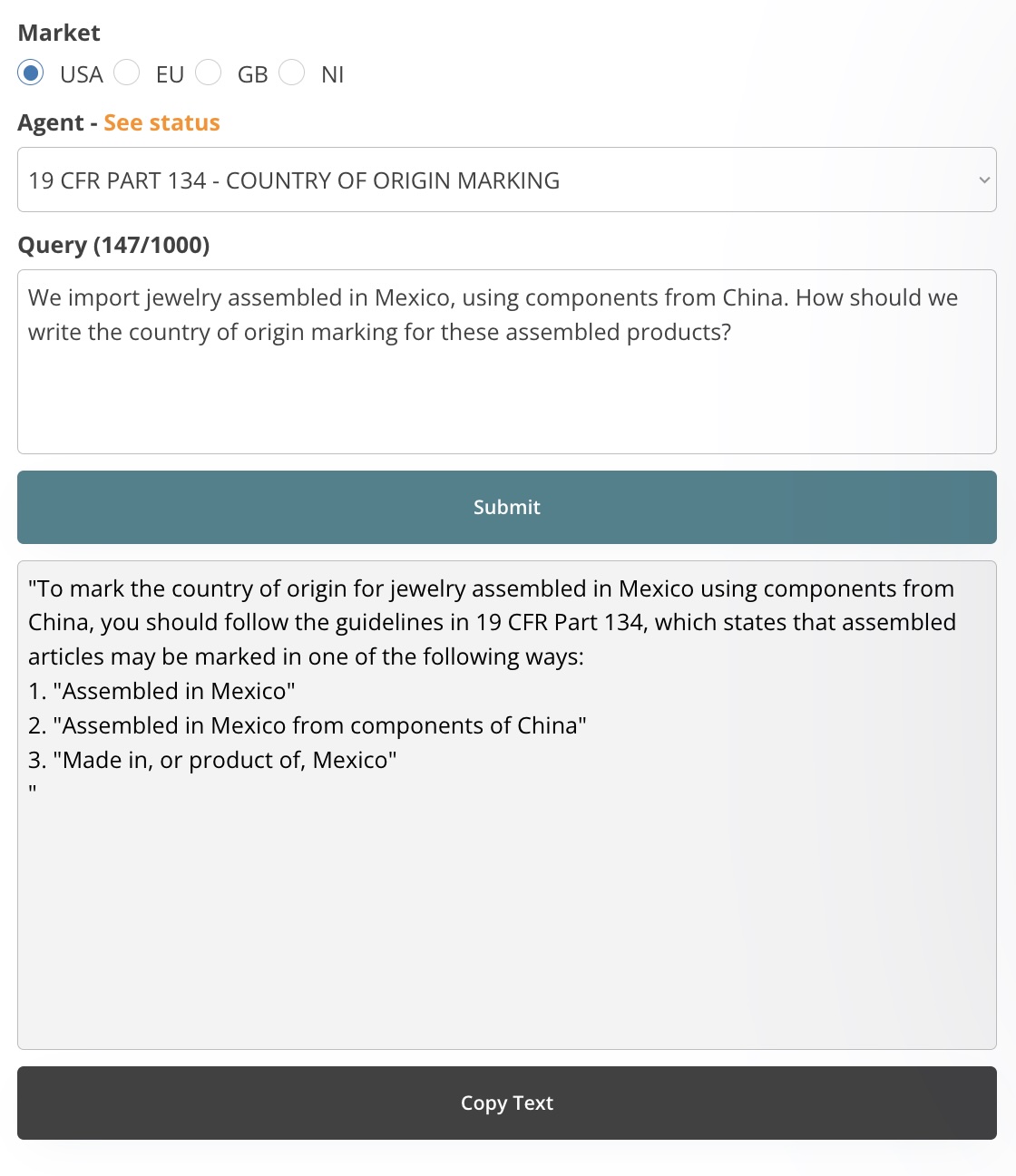

When you ask a question, the AI agent searches for an answer in a knowledge base consisting of EU, UK, and US regulations.

As such, the response is normally based on what is actually written in the relevant regulation – not general training data.

In practice, you can achieve this with ChatGPT and other LLMs as well. However, you would need to find the regulatory documents, upload them one by one, and then write a prompt instructing the LLM to answer based on the source text.

With the Compliance Assistant, you get that out of the box.

How often is the knowledge base updated?

We update the documents in the knowledge bases once a year, in April.

Risk Disclosure

The Risk Disclosure document explains the features of the Compliance Gate Platform, along with their limitations and risks.

AI Tool White Paper

Prompt guidelines

1. Write clear and focused questions (ideally, one at a time).

Example: What are the substances restricted under the RoHS Directive?

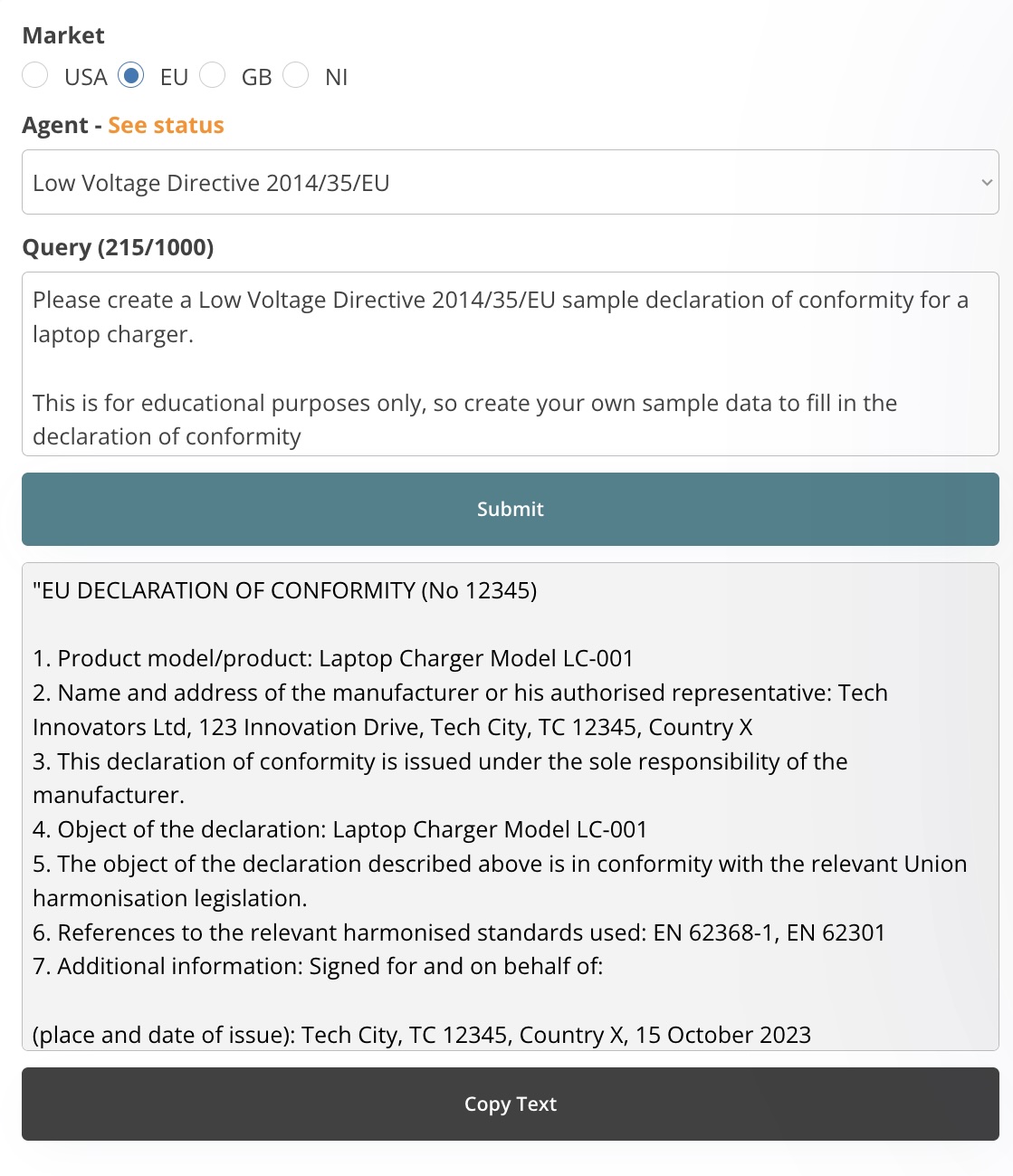

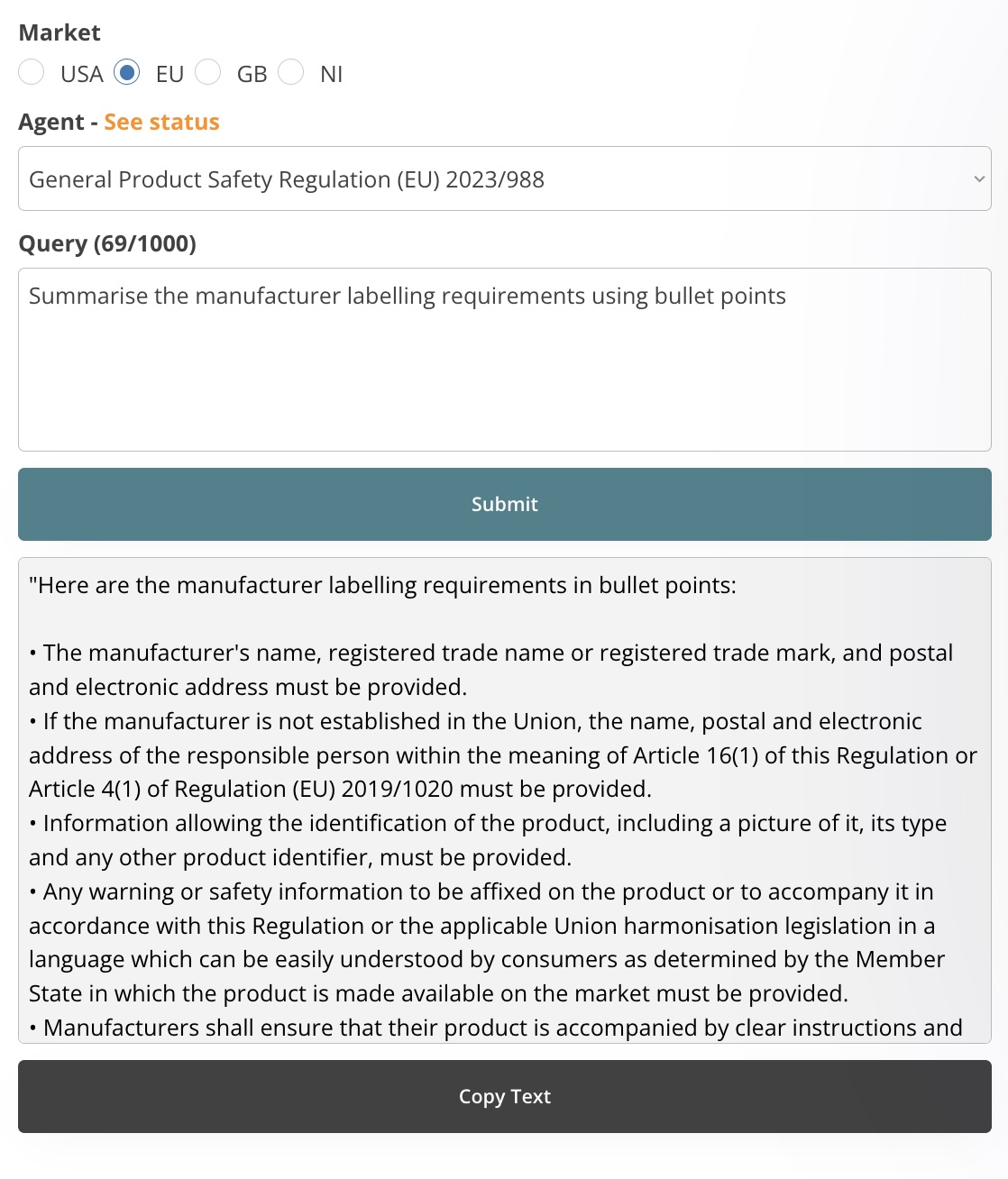

2. You can ask the agent to provide a response using a certain format.

Example: Summarise what I need to include in a Declaration of Conformity using bullet points

Example: Does the directive cover pet toys? (explain why it does or doesn’t)

3. Use terminology relevant to the regulation and/or product for more accurate responses.

4. Try to frame queries in different ways to explore different angles.

5. Vague or open-ended questions tend to result in lower-quality responses.

How should the AI agents be used?

1. Each AI agent is connected to a knowledge base, which includes a set of source texts. You can ask questions to explore compliance requirements related to connected source texts.

2. You can ask questions from different angles to better understand certain aspects of the requirements.

3. The AI tool does not verify if the output is correct. As such, you must never rely on or act on the AI-generated output as a primary source of information. Carefully read the latest version of the relevant source text (i.e., regulatory text or guidance page) before taking any action.

4. You can find the source texts for all AI agents here.

AI agent limitations

1. The AI agents do not think or understand the generated output.

2. The AI agents cannot fact-check, interpret, or understand the generated output.

3. The AI agents generate a response by predicting the next word based on mathematical probability. Think of it as advanced autocomplete software.

4. The AI agents can only generate a relevant output to the extent that the knowledge base contains information sufficient for an “answer”. Regulations and guidance documents do not address every single question, situation, or scenario. When the knowledge base lacks the relevant information, an agent may still generate an output based on irrelevant sources.

5. There is a limit to the quantity of text that an LLM can process. Hence, the agent may not be able to include every single piece of information from the knowledge base that would be required for sufficiently accurate output.

6. The AI agents can make mistakes when selecting knowledge base sources and source items. This can result in an incorrect output being generated.
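
Limitation 5 can be illustrated with a small sketch. It assumes a hypothetical `pack_passages` helper that fills a fixed text budget with passages in rank order. The budget is counted in words purely for simplicity (LLM limits are typically measured in tokens), and none of the names or numbers reflect how the Compliance Assistant is configured.

```python
def pack_passages(ranked_passages, max_words):
    """Keep passages in rank order until the next one would exceed the budget.

    Returns (kept, dropped). Everything from the first passage that does
    not fit onwards is dropped, even if it contains relevant information.
    """
    kept = []
    used = 0
    for passage in ranked_passages:
        word_count = len(passage.split())
        if used + word_count > max_words:
            break  # budget exhausted; stop adding passages
        kept.append(passage)
        used += word_count
    dropped = ranked_passages[len(kept):]
    return kept, dropped

# Hypothetical placeholder passages, ordered from most to least relevant.
ranked = [
    "General rule on restricted substances",        # 5 words
    "Exemption for certain product categories",     # 5 words
    "Transition period for existing stock",         # 5 words
]
kept, dropped = pack_passages(ranked, max_words=10)
print(len(kept), "kept,", len(dropped), "dropped")
```

Here the third passage never reaches the model, so a response generated from the kept passages would not reflect it. This is one reason to compare the output against the full source text.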

Recommended process

Step 1: Write your question/query

Step 2: Reframe your question a few times – Is the output consistent?

Step 3: Compare the output to the source text – Does it match or can you find errors?
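
If you collect the outputs programmatically, Step 2 can be roughly sketched as follows. This is a minimal example under stated assumptions: `ask` is a hypothetical stand-in for however you obtain an output for a question, the 0.8 threshold is arbitrary, and text similarity only flags outputs that differ in wording. It says nothing about whether any output is correct, so Step 3 is still required.

```python
from difflib import SequenceMatcher
from itertools import combinations

def consistency_report(phrasings, ask, threshold=0.8):
    """Ask every phrasing and flag pairs whose outputs differ noticeably.

    `ask` is a hypothetical callable that takes a question string and
    returns the generated output as a string.
    """
    answers = {phrasing: ask(phrasing) for phrasing in phrasings}
    flagged = []
    for first, second in combinations(phrasings, 2):
        similarity = SequenceMatcher(None, answers[first], answers[second]).ratio()
        if similarity < threshold:
            flagged.append((first, second, round(similarity, 2)))
    return flagged

# Canned outputs used only to make the example runnable.
canned_outputs = {
    "Does the directive cover pet toys?": "No, pet toys are outside the scope.",
    "Are pet toys covered by the directive?": "No, pet toys are outside the scope.",
    "Is a pet toy in scope of the directive?": "Yes, all toys are covered.",
}

for first, second, similarity in consistency_report(list(canned_outputs), canned_outputs.get):
    print(f"Inconsistent ({similarity}): {first!r} vs {second!r}")
```

Any flagged pair is a signal to read the relevant source text closely before relying on either output.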

Risks

1. The LLM can output incomplete information.

2. The LLM can hallucinate and output false information.

Data

1. The AI tool does not operate as an archive or file storage service. You are solely responsible for the backup of generated outputs and other safeguards appropriate for your needs.

2. The AI tool sends the user prompt to an external data processor. Read the Privacy Policy for more details.

3. We keep a copy of the prompts and generated answers with the external data processor for 7 days. This is done only for debugging, in case we find issues with the AI tool. After 7 days, this data is deleted.